Towards Wearable, High-Performance Augmented Reality Displays

Currently, AR glasses with half the eye's resolution, a tiny field of view and a small eyebox, requiring helmet-like head mountings, are still hyped as progress. But AR needs far better technology: it should be as light and convenient as the best state-of-the-art eyeglasses, and deliver high resolution and a very large field of view.

In this paper (latest PDF; web page version), we propose curved and translucent on-axis displays as a perfect solution, fulfilling all of these requirements*.

Note: the paper was updated in July 2024, in the introduction and in the sections on the eyebox, dynamic focus and power consumption (new). Get the new one!
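As a rough illustration of why a concentric layout can image the display at infinity, and how a dynamic focus might work, the paraxial spherical-mirror equation 1/s + 1/s' = 2/R can be evaluated for a display surface at, or slightly inside, half the mirror radius. This is a textbook approximation, not the paper's own derivation; the 60 mm radius and the display shifts are hypothetical numbers chosen for illustration.

```python
def image_distance(s_mm: float, radius_mm: float) -> float:
    """Paraxial image distance s' (mm) for a concave mirror of radius R (mm).

    Uses 1/s + 1/s' = 2/R, i.e. focal length f = R / 2. Distances are measured
    from the mirror; a negative result is a virtual image behind the mirror,
    which is what the eye sees. An object exactly at f is imaged at infinity.
    """
    inv = 2.0 / radius_mm - 1.0 / s_mm
    if abs(inv) < 1e-15:
        return float("inf")
    return 1.0 / inv


R = 60.0      # hypothetical mirror radius in mm
f = R / 2.0   # focal length = radius of a concentric display sphere
for shift in (0.0, 0.1, 0.5):  # display moved towards the mirror by `shift` mm
    print(f"shift {shift:.1f} mm -> image at {image_distance(f - shift, R):.0f} mm")
```

With these assumed numbers, moving the display by only 0.5 mm brings the virtual image from infinity to roughly 1.8 m, which suggests why very small actuator movements could be enough for focus adjustment.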

A complete developer toolkit with detailed math, Blender 3D models and tutorial videos can be downloaded here, as well as from displaysbook.info.

* All rights reserved. Patents granted: DE102014013320B4, GB2537193B, US11867903B2.

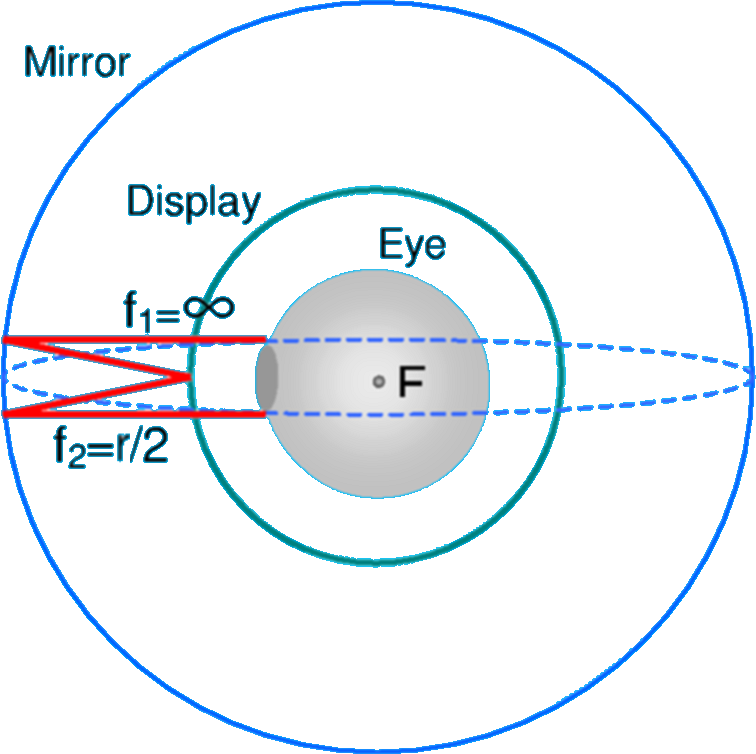

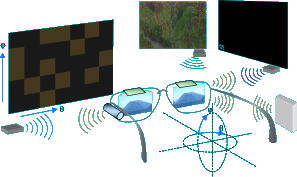

Optics with concentric mirror and display spheres

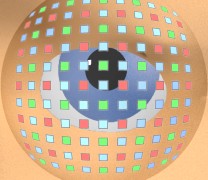

Spherical transparent display (pixels extremely enlarged)

By the way, did you notice AR is really taking off now? Ori Inbar's site has a comprehensive report.

January, 2017

Second edition of "Displays - Fundamentals and Applications" now available, with many updates, enhancements and new content, with an emphasis on 3D and near-eye displays (NED).

The book's website (alternative link) provides supplemental materials for non-commercial use, including all illustrations as well as a Blender model and video tutorials about simulating NED mirror optics (on-axis NED, as below).

Free ebooks: "The End of Hardware" and "Displays - Fundamentals and Applications, 1st edition" are now available as free ebooks for non-commercial use (click on the respective images).

August, 2012

New ideas: learn more about intelligent displays and new concepts for AR glasses.

Intelligent displays

Architectural considerations

Infinity is simple!

July, 2011

New book: The End of Hardware has been both successful and influential in the field of Augmented Reality since it first appeared in 2006. Several leading AR researchers are declared fans of this book. It is still the only book that discusses almost every imaginable application of AR in a scientific way, and it also covers and analyzes the new display technologies necessary for their realization.

As a next step, it seemed logical to create a book covering display technology in its entirety: the fundamentals as well as current and future applications in all areas.

If you are already familiar with AR and your main interest is the ultimate display solution, this new book, titled DISPLAYS, may be for you. Its first official presentation is at SIGGRAPH in August 2011. A Korean edition was published in 2015.

Note that most of the near-to-eye display topics covered in Displays can also be found in The End of Hardware.

2009 - 2011

Added website content; find it here and here, for example.

May, 2009

The End of Hardware, 3rd edition: read more about it here. Note that the subtitle has changed for this edition, from "A Novel Approach to Augmented Reality" to "Augmented Reality and Beyond".

Sept. 17, 2008

Invited keynote talk, given by the author at the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, ISMAR 2008: "Near Eye Displays - A Look into the Christmas Ball" (yes, not 'crystal ball': "once upon a time, I actually broke some Christmas balls to experiment with concave mirrors as could be used in near eye displays"). Also read this report about the keynote talk, by Ori Inbar (PDF version).

The author's personal impressions from ISMAR08: in the poster show, "Wearable Augmented Reality System using Gaze Interaction" by Hyung Min Park, Seok Han Lee and Jong Soo Choi demonstrated eye control, addressing the vital issue of how to trigger events just by gazing. The poster showed the dwell time approach (rest your gaze on an item for about a second and it counts as a selection), a variation they named 'aging', and an approach supporting this by 'half blinking'.
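A minimal sketch of the dwell-time idea, assuming gaze samples that have already been mapped to on-screen item IDs. The sample format, the 1 s threshold and the function name are hypothetical, not taken from the poster: an item is selected once the gaze has rested on it continuously for the dwell time, and only once per fixation.

```python
from typing import Iterable, List, Optional, Tuple


def dwell_select(samples: Iterable[Tuple[float, Optional[str]]],
                 dwell_s: float = 1.0) -> List[str]:
    """Return the items selected by dwell time.

    `samples` are (timestamp_in_seconds, item_id_or_None) pairs in time order.
    A selection fires once per continuous fixation lasting at least `dwell_s`.
    """
    selected: List[str] = []
    current: Optional[str] = None
    start = 0.0
    fired = False
    for t, item in samples:
        if item != current:            # gaze moved to another item (or to nothing)
            current, start, fired = item, t, False
        elif item is not None and not fired and t - start >= dwell_s:
            selected.append(item)      # rested long enough: treat it as a click
            fired = True
    return selected


gaze = [(0.0, "play"), (0.4, "play"), (0.8, "stop"), (1.2, "stop"),
        (1.6, "stop"), (2.0, "stop"), (2.4, None)]
print(dwell_select(gaze))  # ['stop']
```

The glance at "play" is too short to count, while the longer fixation on "stop" triggers exactly one selection; variations such as 'aging' or blink confirmation would replace the simple threshold test.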

The first video is about the aging approach, and here's a second one about half blinking (if you have difficulties playing MP4, get the VLC player; it is available for almost any OS, even as a portable version that needs no installation). Most remarkably, they even built the eye tracker themselves (picture)...

Among the demonstrations were "The Haunted Book" (a book with scary animations coming to life as you leaf through the pages) and the "Magic Book" (a family-friendly one), both by Camille Scherrer, Julien Pilet, Pascal Fua and Vincent Lepetit, using a software basis developed at ETH. These demos showed perfect integration of virtual objects into real scenes by means of visual scene tracking alone. Although they were shown on laptops, one could easily imagine how impressive they would be with near-eye displays. Powerful demonstrations after all, which made the essence of AR immediately obvious, even to the layperson, and a vivid proof of concept for visual scene tracking.

"Optical Free-Form Surfaces in Off-Axis Head-Worn Display Design" by Ozan Cakmakci, Sophie Vo, Simon Vogl, Rupert Spindelbalker, Alois Ferscha and Jannick P. Rolland presented results on optimizing single-mirror display glasses and showed that this works even if nearly 100% optical correction is required. In my opinion, most geometric distortion and even some focus aberration can be handled well by an advanced display, so it is even more evident that perfect single-mirror glasses are feasible. This work is entirely new and, astonishingly enough, the references cited mostly do not predate 2007. It was high time that this topic was taken up.
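Handling geometric distortion in the display amounts to pre-warping the image with the inverse of the optics' distortion. A minimal sketch, assuming a simple one-coefficient radial model r_d = r(1 + k r²), a common textbook approximation (real free-form mirrors would need a richer model, and the coefficient here is made up): for each radius where a pixel should appear, solve for the radius where the display must draw it.

```python
def distort(r: float, k: float) -> float:
    """Hypothetical optics model: a point at display radius r appears at r * (1 + k * r^2)."""
    return r * (1.0 + k * r * r)


def predistort(r_target: float, k: float, iterations: int = 20) -> float:
    """Find the display radius r with distort(r, k) == r_target.

    Fixed-point iteration r <- r_target / (1 + k r^2); converges quickly for
    the mild distortions assumed here (|k| * r^2 well below 1).
    """
    r = r_target
    for _ in range(iterations):
        r = r_target / (1.0 + k * r * r)
    return r


k = 0.1  # made-up coefficient in normalized units; positive k pushes points outward
for r_want in (0.2, 0.5, 0.9):
    r_draw = predistort(r_want, k)
    print(f"want {r_want:.2f} -> draw at {r_draw:.4f} -> seen at {distort(r_draw, k):.4f}")
```

In a real display this inverse mapping would typically be precomputed once per pixel as a lookup table, so the per-frame cost is a single resampling pass.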

Rolf R. Hainich

Added Jan. 23, 2009

Thoughts on retina trackers, and some lines about monocular depth pointing from the ISMAR keynote.

Added Nov. 23, 2008

A new piece of fiction about virtual earth, and a necessary adjustment concerning the safety of digital media.

Added Oct. 23, 2008

Contact lens displays? People are speculating about this. Let's try a scientific approach...

And implants? Maybe a versatile variety could be available quite soon...

Added August 1, 2008

Can virtual objects really be different from real ones, offering features unknown from our common experience? Not just magic behavior, but genuinely new properties and new kinds of user interaction? Yes, there is something that will offer entirely new experiences and still has to be explored: ghost objects, which only appear when you not only look at them but also focus at their proper distance, and which you could actually dissect layer by layer with your eyes.
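Showing an object only when the viewer both looks at it and focuses at its distance requires an estimate of fixation depth. One way to get it, assumed here, is binocular vergence from an eye tracker. The sketch assumes a symmetric fixation straight ahead, a 63 mm interpupillary distance and a 10 cm depth tolerance; the function names and all numbers are illustrative.

```python
import math


def fixation_distance(left_deg: float, right_deg: float, ipd_m: float = 0.063) -> float:
    """Distance (m) to the fixation point from the inward rotation of both eyes.

    Assumes a symmetric fixation straight ahead: each eye turns inward by
    theta / 2, so d = (ipd / 2) / tan(theta / 2), theta being the vergence angle.
    """
    vergence = math.radians(left_deg + right_deg)
    if vergence <= 0:
        return math.inf            # parallel or diverging gaze: looking at infinity
    return (ipd_m / 2) / math.tan(vergence / 2)


def ghost_visible(gaze_hits_object: bool, fixation_m: float,
                  object_m: float, tolerance_m: float = 0.1) -> bool:
    """Show a 'ghost' layer only if it is looked at *and* fixated at its depth."""
    return gaze_hits_object and abs(fixation_m - object_m) <= tolerance_m


d = fixation_distance(1.8, 1.8)    # each eye turned 1.8 degrees inward
print(round(d, 2), ghost_visible(True, d, object_m=1.0))
```

Dissecting an object layer by layer would then mean assigning each layer its own depth and showing whichever layer matches the current fixation distance. Vergence resolution drops quickly beyond a few meters, so the effect works best at arm's length.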


A new near-eye display by Lumus Inc. uses Holographic Optical Elements (HOEs) in quite a surprising way. HOEs are less difficult to understand than one may think, and the technology is important for AR, so here is a genuine introduction, with some hints that you would not easily find elsewhere.

Dated April 5, 2008

It's not yet the glasses phone, but the next trend is mobile phones with built-in projectors, and Microvision, according to press reports, is in talks with major manufacturers. Another step forward.

As one copy protection after another is cracked, most recently even BD+, the Six Postulates are proven right every other day. And this will promote disc sales, indeed!

In his keynote at the Intel Developer Forum 2008, Patrick Gelsinger stated that Moore's Law will last for at least another 29 years. Let's talk about brain chips.

Dated March 25, 2008

Although display glasses will be the most versatile and elegant solution for 3D displays, there will be large 3D screens as well. With all current approaches, it seems quite unrealistic that these could be more than niche products. But there may be a solution that won't cost you an arm and a leg. There is also a new remark on camera array encoding, as well as a new hint on eye trackers employing visual flow.

Dated March 10, 2008

15 years ago I wrote an e-mail to Phil Zimmermann (the author of PGP, who at that time fought for your right to strong encryption), stating that computers were becoming mind extensions, so sneaking into them would amount to thought control and hence violate human rights (in a way, he was on the same track, having named his program 'Pretty Good Privacy'). Recently, the German Federal Constitutional Court, in a historic decision against the 'online search' of computers, established what amounts to a constitutional right to computer privacy.

What does all this have to do with Augmented Reality? Quite a lot, as anybody with some imagination (alas, not so many) could have figured out all along: the closer an information processing device is linked to our senses and the more intensely it accompanies us everywhere, the more real the metaphor of a mind extension becomes. More about the court decision for English-speaking readers here and here. Everything else you need to know is in the book's 'Fairy tales' chapter.

Rolf R. Hainich

Dated Nov. 5, 2007

Scanning mirrors have become a major topic of research. But how can they be optimized for high-resolution display glasses? Here are some more details about the book's suggestions.
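A commonly quoted first-order estimate for laser scanners is N ≈ θ·D / (a·λ): the number of resolvable spots along one axis grows with the full optical scan angle θ (in radians) and the mirror diameter D, and shrinks with the wavelength λ; a is an aperture-shape factor of roughly 1 to 1.3. This is the general textbook relation, not necessarily the book's own derivation. The sketch below simply inverts it to estimate the smallest mirror for a target pixel count; the shape factor and the example numbers are assumptions.

```python
import math


def min_mirror_diameter_mm(pixels: int, optical_scan_deg: float,
                           wavelength_nm: float, shape_factor: float = 1.22) -> float:
    """Smallest scan-mirror diameter (mm) resolving `pixels` spots along one axis.

    Inverts N = theta * D / (a * lambda), giving D = N * a * lambda / theta.
    theta is the full *optical* scan angle (twice the mechanical angle).
    """
    theta = math.radians(optical_scan_deg)
    return pixels * shape_factor * wavelength_nm * 1e-9 / theta * 1e3


# Illustrative case: 1920 pixels over a 40 degree optical scan with red light (640 nm)
print(f"{min_mirror_diameter_mm(1920, 40.0, 640.0):.2f} mm")
```

Because the required diameter scales with the longest wavelength used, the red channel sets the mirror size in a full-color scanner, and doubling the pixel count at a fixed scan angle doubles the mirror diameter.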

Dated Nov. 2, 2007

Piezoelectric micromotors have made rapid progress recently and now promise to provide all kinds of perfect static and dynamic adaptation for the optics of display glasses, at stunningly low weight and power consumption.

Oct. 8, 2007

Microvision is now promoting laser scanner units for wearable display glasses with mobile phone functions, as suggested earlier on this page. This and several other interesting materials and videos can be found on the Microvision website.

Aug. 20, 2007

Added a diagram that clearly illustrates the capabilities of the mask display approach.

July 15, 2007

Camera-based position sensing is one key ingredient of full-featured display glasses. There is a somewhat related technological approach that uses a camera as a sensor for a hand-held 3D input device.

Strangely enough, not a single product is available so far, not even for 2D, even though the sensors of a simple optical mouse could easily do this, and demand would be tremendous because this would make couch computing a cinch.

Additionally, here are some more remarks about holographic displays, and about the future of personal video cameras (AKA camcorders).
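Optical mouse sensors estimate motion by comparing successive tiny camera frames and finding the shift that makes them match best. A minimal pure-Python sketch of that block-matching idea (mean absolute difference over a small search window); the frame sizes and search range are illustrative, and real sensors use dedicated correlator hardware.

```python
from typing import List, Tuple

Frame = List[List[int]]


def estimate_shift(prev: Frame, curr: Frame, max_shift: int = 2) -> Tuple[int, int]:
    """Return (dx, dy) such that curr[y][x] best matches prev[y - dy][x - dx].

    Uses the mean absolute difference over the overlapping region, so shifts
    with smaller overlaps are not unfairly favoured.
    """
    h, w = len(prev), len(prev[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0, 0
            for y in range(max(0, dy), min(h, h + dy)):
                for x in range(max(0, dx), min(w, w + dx)):
                    total += abs(curr[y][x] - prev[y - dy][x - dx])
                    count += 1
            cost = total / count
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best


# Usage: an 8x8 window cut from a larger ramp image (unique pixel values),
# then the window moved so that the content appears one pixel right and one up.
big = [[y * 12 + x for x in range(12)] for y in range(12)]
prev = [row[2:10] for row in big[2:10]]
curr = [row[1:9] for row in big[3:11]]
print(estimate_shift(prev, curr))  # (1, -1)
```

Summing these per-frame shifts gives the 2D pointer motion; a hand-held 3D device would additionally need depth or scale cues, for example from a second camera.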

June 5, 2007

A new approach to holographic displays was presented by SeeReal Technologies at Display Week 2007. We attempt a short assessment of this technology, as far as the available information allows, and of its possible uses for display glasses.

An interesting near eye display is being developed at Carl Zeiss. The original press release is misleading in that the text describes an already existing conventional item, while the picture shows a design study for the envisioned future model. Nevertheless, this is a very advanced approach and it is planned to be completed quite soon.

May 1, 2007

Seeing with the ears (see below) updated: the Seeing With Sound application [58] is now available as a free software add-on for camera cell phones, hence at virtually no cost. Just imagine what could be achieved if we already had mobile phones based on augmented reality glasses. Stereo view and automatic reading triggered by eye pointing are just a few examples.

April 2, 2007

The eye-operated cell phone was proposed here a year ago; the optical designs from the book can easily be simplified for it, and the appropriate eye tracker is already available. No excuses. This is due now.

Some predictions from the cybertales seem to be coming true far earlier than anticipated: EMI has just chosen to free its entire catalog of DRM; or, more precisely, to offer everything DRM-free, and in higher quality, for a little more money. So what's next? HD movies? Doesn't this example show that there isn't any particular reason to have more protection for higher quality media? Premium customers paying premium prices for premium products will never accept trash services. Is that so hard to comprehend?

March 30, 2007

Autostereoscopic screens are seen on many occasions now. While it is no longer very difficult to build these on the basis of LC displays, the fundamental problems are not going away. This technology will not be good enough for 3-dimensional television. Real solutions could only be personal display glasses, which could become mainstream in 10 years, or truly holographic screens, which can only be expected in well over 20 years. Even then, 3D needs special recording and encoding technology quite different from the usual suspects.

Feb. 08, 2007

Consumers' biggest trouble with today's devices is that every one of them has different control panels and operation modes. With the virtual control panel approach enabled by vision glasses, this could all be overcome: little front-end programs could provide a single control panel for any microwave there is, another for any washing machine, any TV, any VCR, any car. The particular general panel(s) you prefer would be entirely your choice, and probably a host of part-time programmers would set out making their own open-source skins for anything there is. Virtual control panels can also be moved and operated as remotes, of course.

By the way, today's devices could also deliver the one-panel-fits-all feature if they had a programmable touchscreen panel and if you carried a pocket computer, mobile phone or the like that would automatically tell them your 'wishes' as soon as you approach.
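The one-panel-fits-all idea is essentially an adapter layer: one generic panel per device category, plus a small driver per concrete device that translates generic commands into that device's own. A minimal sketch; the device classes, command strings and settings keys are entirely hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class Microwave(ABC):
    """Generic interface that every microwave 'skin' talks to."""

    @abstractmethod
    def heat(self, seconds: int, power_percent: int) -> List[str]: ...


class VendorAOven(Microwave):
    """Hypothetical device expecting a power level 1-10 and a time in seconds."""

    def heat(self, seconds: int, power_percent: int) -> List[str]:
        level = max(1, round(power_percent / 10))
        return [f"PWR {level}", f"TIME {seconds}", "START"]


class VendorBOven(Microwave):
    """Hypothetical device expecting watts and an mm:ss time string."""

    MAX_WATTS = 900

    def heat(self, seconds: int, power_percent: int) -> List[str]:
        watts = self.MAX_WATTS * power_percent // 100
        return [f"W={watts}", f"T={seconds // 60:02d}:{seconds % 60:02d}", "GO"]


def generic_panel(device: Microwave, settings: Dict[str, int]) -> List[str]:
    """The user's preferred panel: same controls, whatever the device."""
    return device.heat(settings["seconds"], settings["power"])


prefs = {"seconds": 90, "power": 80}
print(generic_panel(VendorAOven(), prefs))  # ['PWR 8', 'TIME 90', 'START']
print(generic_panel(VendorBOven(), prefs))  # ['W=720', 'T=01:30', 'GO']
```

The user's settings stay the same across devices; only the small per-device adapter differs, which is the part a community of skin authors or manufacturers would supply.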

Newsflash: new links on the cybertales page.

Jan. 25, 2007

Most bizarrely, the newest HD camcorders are as thick as a brick. In principle, even an HD camera can be as small as a 1/8" (3 mm) cube, optics included (such a small lens can deliver the resolution required, formula here). The chip's light sensitivity may be somewhat limiting, but even with encoding and 8 GB of memory chips (almost 1 hr of recording) included, everything could already be squeezed into a sugar cube, small enough to attach to the temple of a pair of glasses. A human head is well suspended, so camera shake won't occur. This alone would make a cute product. We wouldn't even need a real viewfinder: a frame mirrored into the glasses could show exactly the current image area if an eye tracker helps to compensate for the parallax. The device could be combined with the eye-operated cell phone outlined below; the display unit would need nothing better than a single-color laser scanner, and everything, including the battery, would fit into some very light glasses. Even a single-chip eye tracker is possible [86]. No more schlepping. It is just waiting to be done.
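The figures in this paragraph can be sanity-checked with two back-of-the-envelope formulas: the sustained bitrate that fits 8 GB into about an hour is capacity divided by duration, and the Rayleigh criterion θ ≈ 1.22 λ / D gives the smallest lens aperture D whose diffraction limit matches one HD pixel. The 1920-pixel line, 50° horizontal field and 550 nm wavelength are our assumptions, not the book's figures, and this is not the formula referenced in the text.

```python
import math


def bitrate_mbit_s(capacity_gb: float, minutes: float) -> float:
    """Average bitrate (Mbit/s) that fills `capacity_gb` (decimal GB) in `minutes`."""
    return capacity_gb * 8e9 / (minutes * 60) / 1e6


def min_aperture_mm(pixels: int, fov_deg: float, wavelength_nm: float = 550.0) -> float:
    """Aperture diameter (mm) whose Rayleigh limit equals one pixel's angular size."""
    pixel_angle = math.radians(fov_deg) / pixels   # radians per pixel
    return 1.22 * wavelength_nm * 1e-9 / pixel_angle * 1e3


print(f"{bitrate_mbit_s(8, 60):.1f} Mbit/s")
print(f"{min_aperture_mm(1920, 50.0):.2f} mm aperture")
```

Under these assumptions the result is roughly 18 Mbit/s, a plausible rate for compressed HD video, and an aperture of about 1.5 mm, which would indeed fit within a 3 mm cube.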

Dec. 25, 2006

The headline of this TechNewsWorld article, to which the author contributed, gets to the point: "Augmented Reality: Hyperlinking to the Real World".

Nov.15, 2006

The 2nd edition has officially been available at Amazon.com since Nov. 3. As a service to customers of the first edition, here are short summaries of some of the more important new items that haven't yet been mentioned on this page.

The extended references list is on the links page.

If you see a "3D" screen anywhere, just tilt your head sideways and you'll see why it is impossible to sell this as a TV screen. Even the tiny holographic research devices built with tremendous effort so far render horizontal parallax only. For anything but funfair attractions, i.e. for real 3D, you'll always need glasses, such as those described in the book. The question remains how to record and encode real 3D. So here are some more remarks and pictures about holographic encoding, plus the new technique for camera arrays, deriving high-resolution 3D pictures from an array of low-resolution cameras.

Finally, here is a jazzed up version of the cyberwars tale.

The pages presented here are not entirely representative of the book, as they are all about media and contain a few more formulas than usual, added for the experts because this material can't yet be found in textbooks.

Remark: some of the many ideas in the book are absolutely new. In case you want to use these in products, you may contact the author via the reply link.

Oct. 25, 2006

2nd Edition

About a year ago, the first edition of this book was completed.

In it, I had suggested holographic encoding instead of the object-oriented MPEG extensions usually tried for representing 3-dimensional images. Back then I thought somebody had certainly come up with this long before, as it appeared to be a very natural conclusion, but finding something in the myriad of holography papers is pretty difficult. Yet a very recent article [83] indicated that the idea of holographic encoding may have been entirely new when I published it.

So I was finally justified in having written several more pages of explanation on it in the meantime, a decision originally driven more by the fact that most of the literature I found presents the basics of holography quite unsatisfactorily, for this purpose at least.

This was not the only reason for a new edition: in the media chapter, a new technique is presented that will make camera arrays a lot more economical. Some interesting little items have been added to the applications and especially to the fiction chapter. The concept of the mask display, as simple as it may be, is still ahead of its time [77] and deserved a few additions. Several new references that had meanwhile been added to the book's website, as well as the comments below on this very page, also went into the book, of course, as did a more detailed explanation of holographic laser scanners. The formula given for laser beam deviation suffered from a typo: the actual deviation turns out larger, which does not change the conclusions, except that even bigger scan mirrors would be required. The new derivation added about lens resolution also applies to beam deviation.

I think I have now managed to explain the method of orientation by interlinked meaningless image detail even better, although 'The End of Hardware' is more about hardware (the final hardware, so to speak) than about software. What we need real soon now is vision interface hardware, small and versatile enough for personal use, an equivalent of the PC, to create everyday applications on. Without the PC, there wouldn't be any noteworthy software industry by now, would there?

By the end of July, the new edition had essentially been compiled, and it should be in stores by mid-November. It now has 300 pages and 145 illustrations.

April 15, 2006

Seeing with the ears

I wrote about this on page 188 of the book; it is important enough to extend it a bit here:

Generating spatial sound impressions complying with the pictures of virtual objects is something that's necessary for a vivid impression with both virtual devices and media, and definitely needs to be a component of the vision simulator's software. This leads to another thought: we could also generate sounds just from the images, letting things start to hum, whistle or crackle.

Entirely weird? Not at all. There are people who can't see but have learned to orient themselves just by making noises and listening to the echoes. It can work extremely well. There is even a special generator for these echo blips already available.

Yet couldn't we get an even better sound image by using the camera images and generating spatial sound as if the objects were actively emitting it? Spatial discrimination improves a lot if we can turn our head and listen for the changing sounds. Many things already have a very characteristic sound image (knock on them and you know). We could also encode object color, size and speed with different sounds. So vision simulator technology could even provide a very efficient and affordable implementation of solutions for visually impaired people. Imagine the cameras recognizing signs and inscriptions on ordinary objects and making them sound different. Eye or finger pointing could then tell the device to read the text. The cameras could recognize persons from a distance, recognize many other things and just tell about them, and so on.
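Letting objects 'sound' from where they are needs at least a direction-dependent left/right balance that updates as the head turns. A minimal sketch using constant-power stereo panning, a standard audio technique that is far simpler than the HRTF-based spatialization a real device would use, plus a hypothetical mapping of object size to pitch; the function names, the clamping and the pitch formula are illustrative assumptions.

```python
import math
from typing import Tuple


def pan_gains(object_azimuth_deg: float, head_yaw_deg: float) -> Tuple[float, float]:
    """Left/right gains for a sound source, relative to where the head points.

    Constant-power panning: the gains are cos/sin of a pan angle, so
    left^2 + right^2 == 1 and loudness stays steady while the head turns.
    Azimuths are in degrees, positive to the right; sources beyond +-90 deg
    relative to the head are clamped to fully left or fully right.
    """
    rel = max(-90.0, min(90.0, object_azimuth_deg - head_yaw_deg))
    pan = (rel + 90.0) / 180.0 * (math.pi / 2)   # 0 = hard left, pi/2 = hard right
    return math.cos(pan), math.sin(pan)


def size_to_pitch_hz(size_m: float) -> float:
    """Hypothetical mapping: larger objects get lower tones (clamped to 100-2000 Hz)."""
    return max(100.0, min(2000.0, 400.0 / max(size_m, 0.01)))


left, right = pan_gains(object_azimuth_deg=30.0, head_yaw_deg=0.0)
print(round(left, 3), round(right, 3), size_to_pitch_hz(0.5))
```

Turning the head towards the source (head_yaw_deg=30) balances both channels, which is exactly the cue that makes head movement improve spatial discrimination.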

This is not as far-fetched as it may first appear, and indeed there is already at least one project with similar objectives [59].

Let's consider this further with the vision simulator in mind: many visually impaired people are still able to use eye pointing with a certain degree of accuracy. Imagine different application windows arranged in 3D space, clearly separated, sounding according to status or content (also clearly separable), and reacting to pointing by reading their contents or accepting entries, perhaps with a mouse pen. Imagine a person wearing the specialized vision simulator device taking a book and opening it, and the position cameras would right away start 'reading' it aloud through the earphones. With eye pointing, the device would also know when to start reading and whether on the left or the right page. In addition, finger pointing could be used to select certain paragraphs or sentences. All absolutely intuitive, ergonomic and natural.

Using the position cameras for general orientation needs some additional thought. Simply recognizing locations, which is what we typically require of a vision simulator, does not require identifying objects in a way that would determine their nature or meaning. It does not even require separating different natural objects from each other. Meaningless scene details are entirely enough.
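Recognizing a place from meaningless scene detail can be sketched as voting: store compact binary descriptors of small image patches for each known location, then let each descriptor seen now vote for the location holding its nearest stored match. This is a generic illustration, not the book's method; the 16-bit descriptors, the place names and the 2-bit threshold are invented, while real systems use engineered or learned descriptors such as ORB's 256-bit ones.

```python
from collections import Counter
from typing import Dict, List, Optional


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def recognize(query: List[int], places: Dict[str, List[int]],
              max_dist: int = 2) -> Optional[str]:
    """Return the place whose stored descriptors match the most query descriptors.

    Each query descriptor votes for the place of its nearest stored descriptor,
    but only if that match is within `max_dist` bits; no object identity is needed.
    """
    votes: Counter = Counter()
    for q in query:
        best_place, best_d = None, max_dist + 1
        for place, descs in places.items():
            for d in descs:
                dist = hamming(q, d)
                if dist < best_d:
                    best_place, best_d = place, dist
        if best_place is not None:
            votes[best_place] += 1
    return votes.most_common(1)[0][0] if votes else None


places = {
    "kitchen": [0b1010110011110000, 0b0001111000110101, 0b1111000011001100],
    "hallway": [0b0101001100001111, 0b1100110000110011, 0b0000111111110000],
}
# Slightly noisy views of two kitchen patches, plus one unrelated patch
seen = [0b1010110011110001, 0b0001111000110111, 0b1001011010010110]
print(recognize(seen, places))  # kitchen
```

The unrelated patch simply casts no vote, which is why such schemes tolerate clutter and partial occlusion without ever knowing what the patches depict.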

Image recognition in the classical sense, as we would require for an intelligent orientation support system, is different. We would need far more sophisticated software and probably some support in case of ambiguities in the image interpretation (a glass door, for example, is a difficult case), or in case of insufficient light. In these situations, an additional depth sensor, maybe a simple ultrasound device, would help quite a lot. Many things to develop, yet certainly way easier than creating the complete, perfect vision simulator, especially because we won't need those high-end displays here.

There is also an amazing project with an entirely different approach, as it translates pixel patterns directly into spectral patterns, a sort of sweep signal [58]. Surprisingly, people can learn to interpret these patterns very well, and actually 'see' the image that a camera delivers. It's astonishing that this works at all, and once more it reveals how flexibly the human brain can adapt to its environment, although the learning curve seems to be pretty steep. Currently this works with a single head camera and is about to be extended to stereo view and more. This may as well be a basis for adding some of the features discussed above, like automatic object recognition or classification, automatic reading, spatial sound impressions and so on.
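The image-to-sound sweep can be sketched in a few lines. Assuming a grayscale image given as rows of brightness values in 0..1, the columns are scanned left to right over time, each row gets its own sine frequency (higher rows sound higher) and pixel brightness sets that tone's loudness. This follows the general principle described for the cited approach [58]; the frequency range, scan duration and sample rate are our own illustrative choices, not its actual parameters.

```python
import math
from typing import List


def image_to_sweep(image: List[List[float]], duration_s: float = 1.0,
                   sample_rate: int = 22050, f_low: float = 500.0,
                   f_high: float = 5000.0) -> List[float]:
    """Turn a grayscale image (rows of 0..1 values, top row first) into audio samples.

    Time axis <- image columns, scanned left to right.
    Pitch     <- row position: top row gets f_high, bottom row f_low
                 (keep f_high below sample_rate / 2 to avoid aliasing).
    Loudness  <- pixel brightness.
    """
    rows, cols = len(image), len(image[0])
    freqs = [f_high - (f_high - f_low) * r / max(rows - 1, 1) for r in range(rows)]
    n = int(duration_s * sample_rate)
    samples: List[float] = []
    for i in range(n):
        t = i / sample_rate
        col = min(cols - 1, i * cols // n)   # column currently being "played"
        s = sum(image[r][col] * math.sin(2 * math.pi * freqs[r] * t)
                for r in range(rows))
        samples.append(s / rows)             # scale so the output stays within [-1, 1]
    return samples


# A 3x4 image with a single bright pixel in the top-right corner:
img = [[0, 0, 0, 1],
       [0, 0, 0, 0],
       [0, 0, 0, 0]]
audio = image_to_sweep(img, duration_s=0.5)
print(len(audio))
```

The example stays silent for the first three quarters of the sweep and then plays a high tone, telling the listener that something bright sits at the top right of the view.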

Applications like this could lead to very good products very fast, so they are directly interesting to businesses, and basing them on a widely used, common vision simulator technology would make these things more affordable as well.

Currently, a lot of research effort is invested in encoding stereo pictures with conventional MPEG standards and/or mesh/texture-based approaches. Yet no matter how hard you try, this will always tend to resemble puppet theater. Holograms, in contrast, contain all the 3D picture information that could be seen, reproducing all perspectives, even fog, lenses, mirrors, and natural focus. Could they be used for encoding and transmission?

The answer is: simply and perfectly, even without requiring a lot more bandwidth. How? No idea? Here's how. More in the book.


Copyright © 2006-2011 Rolf R. Hainich; all materials on this website are copyrighted.

Disclaimer: All proprietary names and product names mentioned are trademarks or registered trademarks of their respective owners. We do not imply that any of the technologies or ideas described or mentioned herein are free of patent or other rights of ourselves or others. We also do not take any responsibility for, or guarantee, the correctness or legal status of any information in this book or this website or any documents or links mentioned herein, and we do not encourage or recommend any use of it. You may use the information presented herein at your own risk and responsibility only. To the best of our knowledge and belief, no trademark or copyright infringement exists in these materials. In the fiction part of the book, the sketches, and anything printed in special typefaces, names, companies, cities, and countries are used fictitiously for the purpose of illustrating examples, and any resemblance to actual persons, living or dead, organizations, business establishments, events, or locales is entirely coincidental. If you have any questions or objections, please contact us immediately. "We" in all above terms comprises the publisher as well as the author. If you intend to use any of the ideas mentioned in the book or this website, please do your own research and patent research and contact the author.